Large language models (LLMs) are among the most visible recent advances in natural language processing (NLP). Understanding how these models are built and trained requires a solid grasp of advanced mathematics and computer science. Fortunately, cloud providers such as Amazon Web Services (AWS) offer these models as a web service, so using one boils down to a simple API call with the right prompt. In this article we show how to do that with Meta’s Llama 2 model in Amazon Bedrock, using Laravel (PHP) as the backend framework. Let’s dive in.

Getting Access

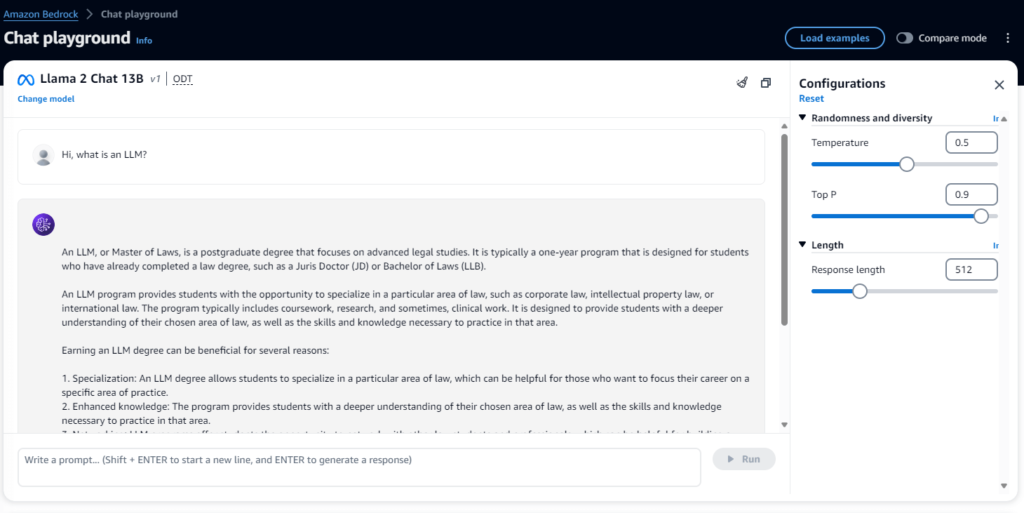

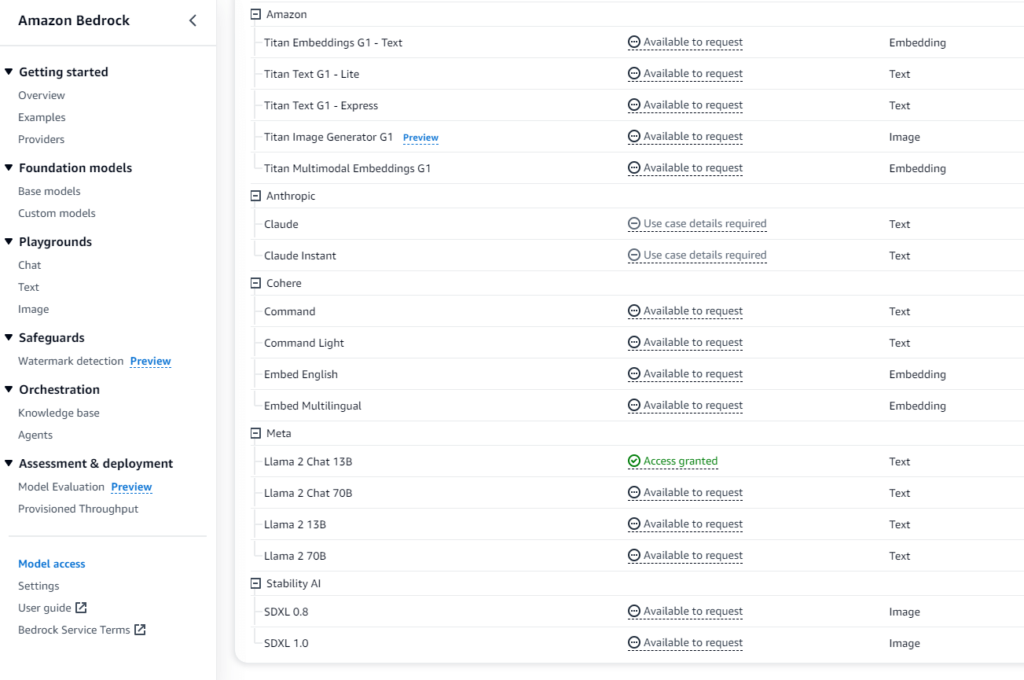

First, create an AWS account if you do not already have one. Then open the Amazon Bedrock page in the AWS console, navigate to Model access, and request access to the required model (Llama 2 Chat 13B in this case) as shown in Figure 1. Access is granted a short while after you submit the request.

Next, you can start experimenting with the model right away in the chat playground (in the menu, navigate to Playgrounds → Chat). Write a prompt as shown in Figure 2 and the model returns a response. You can also adjust the model parameters (temperature, top-P, and response length in this case) in the UI. This is the best place to experiment with your model hands-on.

Create AWS Access Key

Log in to the AWS console and navigate to the IAM dashboard.

In the left navigation pane, select Users.

Select the user for whom you want to create an access key.

Click on the Security credentials tab.

Under the Access keys section, click on Create access key.

A new access key will be created. You can download the access key ID and secret access key as a CSV file or copy them to your clipboard.

Please note that access keys are long-term credentials for an IAM user or the AWS account root user. You can use access keys to sign programmatic requests made through the AWS CLI or to the AWS API (directly or using an AWS SDK). Manage your access keys securely and never share them with unauthorized parties.

Configuring AWS CLI

Before developing the chatbot integration, you must first configure the AWS CLI.

Install the AWS CLI on your computer. You can download the installer from the AWS website.

After installing the AWS CLI, you need to configure it with your AWS access key ID and secret access key. You can do this by running the aws configure command in your terminal or command prompt. The command will prompt you to enter your access key ID, secret access key, default region name, and default output format.
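By default, aws configure writes the keys to an INI-style credentials file in the .aws folder of your home directory, and the AWS SDK for PHP can read the default profile from there. The sketch below is one way to sanity-check that file from PHP before wiring up the chatbot. The helper name hasAwsProfile is hypothetical and not part of the SDK, and the sample text uses the placeholder keys from the AWS documentation rather than real credentials.

```php
<?php

/**
 * Check whether INI-formatted credentials text defines a complete profile.
 * Illustrative helper, not part of the AWS SDK.
 */
function hasAwsProfile(string $iniContents, string $profile = 'default'): bool
{
    // INI_SCANNER_RAW keeps values verbatim; secret keys may contain "/" or "+".
    $profiles = parse_ini_string($iniContents, true, INI_SCANNER_RAW);

    if ($profiles === false || !isset($profiles[$profile]) || !is_array($profiles[$profile])) {
        return false;
    }

    $entry = $profiles[$profile];

    // A usable profile needs both halves of the access key pair.
    return !empty($entry['aws_access_key_id']) && !empty($entry['aws_secret_access_key']);
}

// Sample text with the placeholder keys from the AWS documentation.
$sample = <<<'INI'
[default]
aws_access_key_id = AKIAIOSFODNN7EXAMPLE
aws_secret_access_key = wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
INI;

var_dump(hasAwsProfile($sample));            // profile "default"
var_dump(hasAwsProfile($sample, 'staging')); // a profile that is not defined
```

To check a real setup on Linux or macOS, you could pass in the result of file_get_contents(getenv('HOME') . '/.aws/credentials'); that path is an assumption about the default location.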

Install AWS SDK for PHP

Although AWS APIs can be accessed directly by sending requests to the respective endpoints, the easiest and recommended way is to go through an AWS SDK. AWS provides Software Development Kits (SDKs) for many popular technologies and programming languages. An SDK supplies pre-built components and libraries, such as request signing and service clients, so you do not have to write and debug that code from scratch. This also shortens the time needed to build, integrate, and deploy an application.

You can install the AWS SDK for PHP using Composer by running the following command in your terminal or command prompt:

composer require aws/aws-sdk-php

Developing a Llama 2 Powered Chatbot

Finally, you can start coding the chatbot. First, initialize the Bedrock Runtime client in the constructor of your class. To do so, import the client class:

use Aws\BedrockRuntime\BedrockRuntimeClient;

The client can be initialized as follows:

    $this->client = new BedrockRuntimeClient([
        'version' => 'latest',
        'region' => 'us-east-1',
    ]);

The client configuration specifies the API version of the service ('latest' selects the most recent one) and the AWS region to send requests to.

Next, the most important thing when interacting with an LLM is getting your prompt right. A prompt is an instruction written in natural language that you provide as input to the LLM. Part of the prompt comes from the user, for example a question the user asks the chatbot. One technique for steering the conversation is to place an instruction text at the beginning of the prompt, before the user’s question. An example instruction would be:

    protected $instruction = <<<EOD
    [INST]You are now chatting with a friendly assistant! Feel free to ask any questions or share your thoughts. [/INST]
    EOD;
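Llama 2 chat models are usually prompted with a template that wraps the system instruction in <<SYS>> markers and places the user's message inside the same [INST] block. The sketch below is a minimal, single-turn version of that template. The function name buildLlama2Prompt is hypothetical, and stripping the control markers from user input is an extra precaution added for this example, not something the original code does.

```php
<?php

/**
 * Build a single-turn prompt in the Llama 2 chat template.
 * Function and parameter names are illustrative, not part of any SDK.
 */
function buildLlama2Prompt(string $systemPrompt, string $userMessage): string
{
    // Remove template markers from user input so a user cannot close the
    // instruction block early and inject their own instructions.
    $clean = str_replace(['[INST]', '[/INST]', '<<SYS>>', '<</SYS>>'], '', $userMessage);

    return sprintf(
        "<s>[INST] <<SYS>>\n%s\n<</SYS>>\n\n%s [/INST]",
        trim($systemPrompt),
        trim($clean)
    );
}

$prompt = buildLlama2Prompt(
    'You are a friendly assistant. Answer briefly.',
    'What is Amazon Bedrock? [/INST]'
);

echo $prompt, PHP_EOL;
```

The resulting string can be passed as the prompt field of the request body. Multi-turn conversations need additional [INST] blocks for earlier turns, which this sketch leaves out.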

Finally, we can invoke the model through the Bedrock Runtime client. In this example, the call lives in a controller method that implements an endpoint. When a user submits text in the UI, a request arrives at this endpoint.

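    public function getChatResponse(Request $request)
    {
        // Method to handle chatbot requests (Request is Illuminate\Http\Request)
        $input = $request->input('input');
        $formattedPrompt = sprintf("%s\n%s\n", $this->instruction, $input);
        $session = $request->input('session'); // read from the request, not used in this excerpt

        $response = $this->client->invokeModel([
            'modelId' => 'meta.llama2-13b-chat-v1',
            'body' => json_encode([
                'prompt' => $formattedPrompt,
                'max_gen_len' => 128, // upper bound on the number of generated tokens
                'temperature' => 0.1, // low value keeps answers close to deterministic
                'top_p' => 0.9,
            ]),
            'accept' => 'application/json',
            'contentType' => 'application/json',
        ]);

        // Assumed completion: decode the JSON body and return the text, which
        // Llama 2 on Bedrock places in "generation". The "output" key is illustrative.
        $result = json_decode((string) $response['body'], true);

        return response()->json(['output' => $result['generation'] ?? '']);
    }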

The formatted prompt is created by appending the text sent by the user to the instruction text. Now you have an AWS-powered chatbot at your disposal!
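The request body passed to invokeModel and the JSON that comes back can each be handled by a small helper, which keeps the controller short and makes both steps testable without calling AWS. This is a sketch under stated assumptions: the helper names are hypothetical, the clamping ranges (1 to 2048 tokens, 0 to 1 for temperature and top_p) reflect the limits documented for Llama 2 on Bedrock as far as we know, and the sample response is hand-written rather than captured from a real call.

```php
<?php

/**
 * Encode the request body for a Llama 2 model.
 * Helper name and clamping ranges are assumptions for illustration;
 * check the Bedrock model parameter reference for current limits.
 */
function buildLlamaRequestBody(
    string $prompt,
    int $maxGenLen = 128,
    float $temperature = 0.1,
    float $topP = 0.9
): string {
    return json_encode([
        'prompt'      => $prompt,
        'max_gen_len' => max(1, min(2048, $maxGenLen)),
        'temperature' => max(0.0, min(1.0, $temperature)),
        'top_p'       => max(0.0, min(1.0, $topP)),
    ], JSON_THROW_ON_ERROR);
}

/**
 * Pull the generated text out of a response body.
 * Assumes the body is a JSON object with a "generation" field.
 */
function extractGeneration(string $responseBody): string
{
    $decoded = json_decode($responseBody, true, 512, JSON_THROW_ON_ERROR);

    if (!is_array($decoded) || !isset($decoded['generation']) || !is_string($decoded['generation'])) {
        throw new UnexpectedValueException('Response body has no "generation" field.');
    }

    return trim($decoded['generation']);
}

// Hand-written sample body, not captured from a real call.
$sampleBody = '{"generation": " Hello! How can I help you today?", "stop_reason": "stop"}';

echo buildLlamaRequestBody('Hi', 5000), PHP_EOL; // max_gen_len is clamped
echo extractGeneration($sampleBody), PHP_EOL;
```

In the controller, the first helper would supply the body field and the second would receive the response body cast to a string.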

Code gist: https://gist.github.com/dilin993/fca35e7af1d3575853b91630a78afa4a

This article is from Xoftify.com
